By Bridget K. Burke

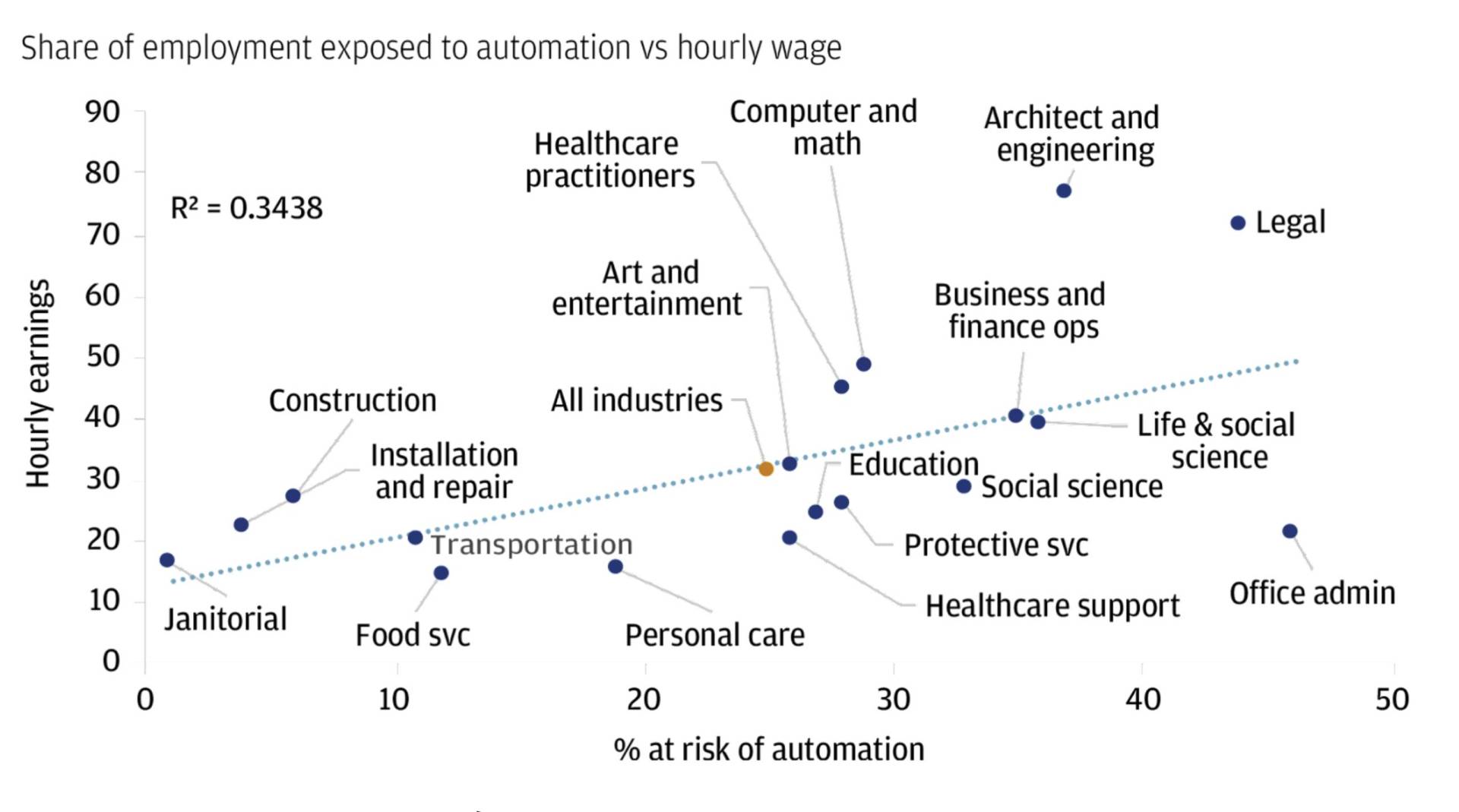

Within 10 years, AI may be able to do “80% of 80% of all jobs that we know of today,” venture capitalist and early OpenAI backer Vinod Khosla boldly told the Wall Street Journal’s Tech Live conference in October 2023. At the more conservative end, Goldman Sachs estimates that AI could automate about a quarter of the tasks performed today in the U.S. and Europe in the next few years.

Interestingly, it’s the high-knowledge, non-routine jobs, such as those in healthcare, legal, and financial fields, that are most at risk (Figure 1). On the flip side, jobs that demand manual labor or rely heavily on interpersonal interaction, like construction and personal care, seem more resistant to AI automation.

Source: Bureau of Labor Statistics. Data as of April 10, 2023

Considering the broader implications of AI across various industries, strong leadership will look different as AI is integrated into the workplace. Here are three ways to adapt to lead in the AI era.

Advance with AI, But Stay Human

Picture a world where AI isn’t just a buzzword but a teammate, enhancing the human touch in patient care and operational efficiency and reshaping the very fabric of healthcare management. Despite AI’s many capabilities, effective leadership requires uniquely human qualities that AI cannot yet duplicate. Leaders must blend the wisdom of experience with the insights of AI. Visionary human-AI leadership, rooted in an open heart, a tech-savvy mind, continuous learning, authenticity, and ethical policy expertise, requires that leaders become both team leaders and visionaries.

The best leaders of the future will leverage AI for efficiency and decision-making while also emphasizing human qualities like empathy, openness, awareness, compassion, and wisdom, which are essential for authentic leadership. This delicate balance paves the way for a leadership style that is adaptive, inclusive, and attuned to the nuanced demands of our increasingly digital world.

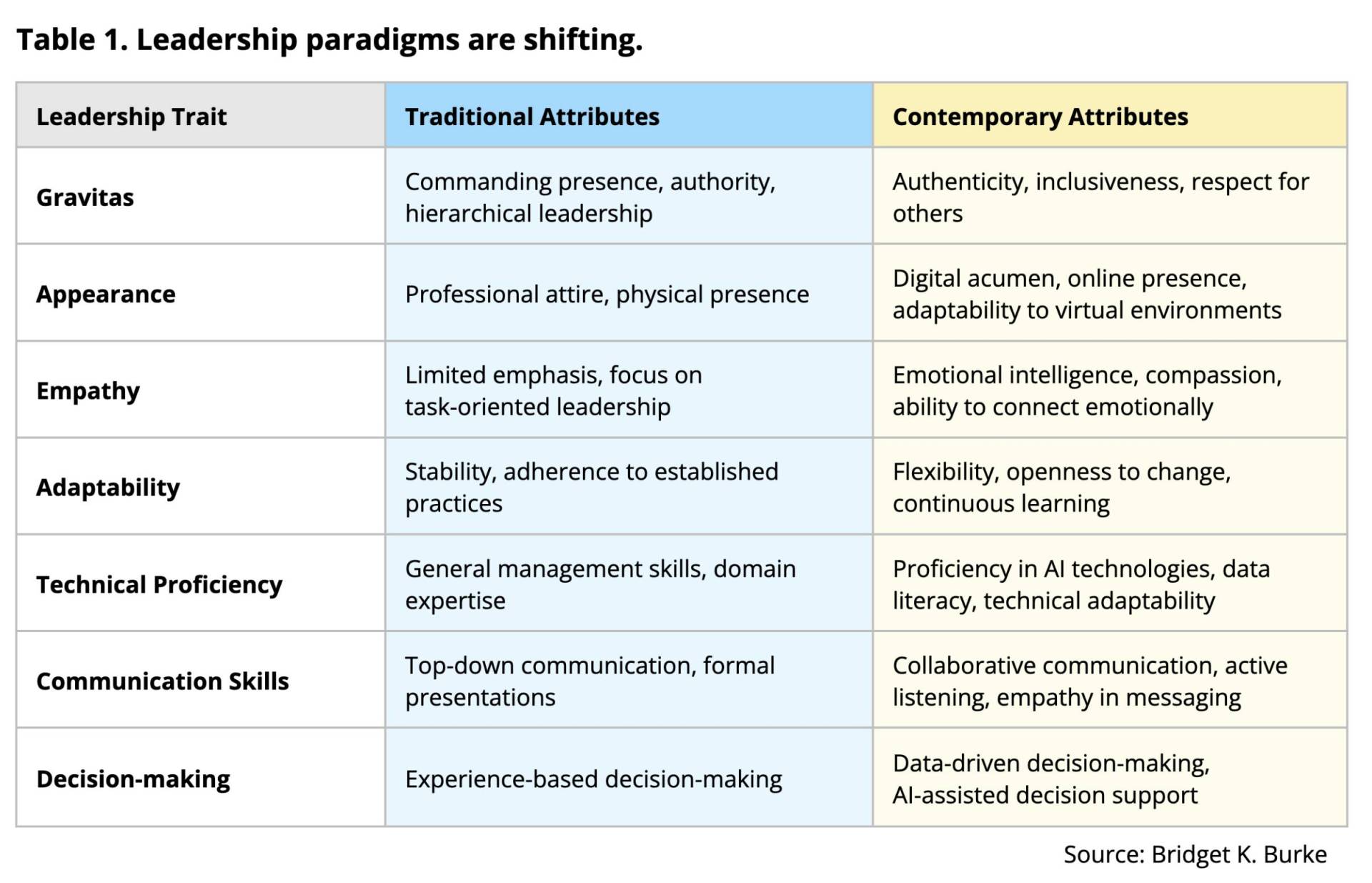

Moreover, the traditional hallmarks of leadership presence, like gravitas and appearance, are giving way to more contemporary values: authenticity, inclusiveness, respect for others, digital acumen, and the ability to communicate and lead effectively in virtual environments, as Sylvia Ann Hewlett notes in her Harvard Business Review article, “The New Rules of Executive Presence.” She underscores the importance of authenticity, of cultivating an open, inclusive online presence, and of adapting leadership styles to meet the expectations of a diverse and digital-first workforce (Table 1).

As leadership traits evolve to embrace authenticity and digital proficiency, the advent of AI within organizational teams represents a parallel shift, demanding a redefinition of managerial roles and competencies that leaders need to navigate with care.

Learn for Tomorrow

As healthcare integrates AI, it’s important for leaders to understand how AI can be applied in various healthcare domains. This doesn’t mean leaders need to become data scientists. It does mean that they should develop a robust working knowledge of AI’s capabilities, limitations, and ethical boundaries, deep enough to make informed decisions and guide their teams effectively. For example, leaders should understand how AI-powered diagnostic tools can analyze medical images, such as X-rays or MRIs, to help radiologists detect abnormalities more accurately and efficiently.

The leader’s goal is to guide with confidence, making informed choices that propel their teams and patient care forward. Training leadership first is essential, because leaders’ understanding is crucial for setting benchmarks and deploying AI effectively. But leaders don’t know everything, so they must listen to, and learn from, the experts. For healthcare leaders looking to enhance their skills in the AI-enabled environment of 2024, several continuous learning programs, courses, and tools are available. Here are a few notable options:

- Business Applications for AI in Health Care – Harvard University | This certificate of specialization includes two programs chosen by participants from three options, offered online and on-site.

- Artificial Intelligence in Health Care – Massachusetts Institute of Technology (MIT) | This self-paced online course spans six weeks and aims to equip healthcare leaders with a comprehensive understanding of AI in healthcare, including its applications, limitations, and opportunities.

- Transforming Healthcare Through Big Data, Analytics, and AI – Stanford University | This 4-day in-person, immersive program explores the influence of AI technology on leadership, management, and organizational transformation.

Additionally, private companies now offer virtual reality simulation platforms whose immersive experiences put leaders in the shoes of team members or patients, deepening their understanding and increasing their empathy. New leadership development platforms provide real-time feedback, personalized learning experiences, and AI-powered simulations to create highly adaptive programs tailored to individual needs.

These programs and courses blend foundational knowledge with innovative insights into AI’s emerging role in healthcare, while the tools provide first-hand experience of learning with AI as a partner. Together they cover a broad range of topics and experiences, from AI applications in healthcare to leadership strategies to real-time personalized learning, making them highly relevant for healthcare leaders navigating a technologically advanced healthcare environment in the coming years.

Foster a Learning Culture

The AI landscape keeps evolving, and your team’s expertise and organizational culture must evolve with it.

- Leaders must enable open communication and provide understanding and support as their teams navigate the impact of AI on their roles.

- Leaders should organize workshops or discussions to address their teams’ fears and provide reassurance that AI is meant to enhance their work, not replace their expertise.

It’s about building trust and fostering a culture where every team member feels valued, understood, and supported professionally. It’s crucial for leaders to foster an environment where continuous learning is part of the organizational DNA. Invest now in continuous learning programs that help people in every role improve their soft skills and get smart on AI. No exceptions.

- Bring in experts from your existing application platforms for AI workshops that offer hands-on learning with real-world organizational scenarios in your IT training “sandbox,” collaborate with educational institutions on custom courses for your organization, or create an internal learning hub with AI training programs and free resources, such as AI courses from Microsoft, DeepLearning, Google, and others that use public data for training.

- Provide access to on-demand training platforms like Coursera and edX, which offer AI courses focused on healthcare, some of them tailored to specific healthcare roles. Here are three popular courses that use public data:

  - How to Use ChatGPT in Healthcare | edX

  - AI in Healthcare | Stanford University via Coursera

  - Artificial Intelligence in Healthcare | MIT via edX

- Encourage personal experimentation with a free chatbot such as ChatGPT, Copilot, Gemini, or Perplexity. Give team members starter prompts that they can try out on their personal devices using their own data and interests. Example: “You are a healthcare AI expert who knows the perfect 80-20 of learning (learning 80% of the meat with 20% effort). I am in the Healthcare industry, in a <role> and I want to spend 2 hours learning about AI and how it will impact my role in 2024. Create a learning curriculum for me including the topics to cover, exact resources, real-world use cases, and 5 test questions at the end of each section to ensure that I understand the topic. My goal is to learn the basics about AI, understand how it will impact my specific role, and learn about specific real-world use cases related to my role.”

- Facilitate generative AI exploration with fun, free games like Google Labs’ Say What You See, Poem Postcards, and MusicFX, where team members can be creative, collaborative, curious thinkers while enhancing their AI literacy using public data.

- Help team members stay up to date on the latest trends and developments in AI within the healthcare industry by covering the cost of attending conferences and joining industry associations like the California Association of Healthcare Leaders (CAHL), where they can participate in healthcare AI educational webinars and in-person local events, as well as national education from the American College of Healthcare Executives.

- Consider giving people a few hours each week on the job to learn AI basics to help them understand how AI is changing their specific role before implementing an AI tool that they will be required to use.

Focus on providing a variety of learning options that make it easy for everyone to upskill at their own pace. Making learning a part of the daily routine will empower your team and strengthen your organizational resilience. As leaders prepare themselves with the knowledge and skills needed to navigate AI integration, it’s equally crucial to stay abreast of evolving policies and regulations shaping the future of healthcare AI.

Shape the Future Through Policy

The regulatory landscape for AI in healthcare is always changing. It is essential for leaders and organizations to stay informed, engage with policymakers, and participate in industry forums. It’s critical that AI in healthcare is equitable and unbiased. Generally, there are concerns about a potential lack of transparency in the functioning of AI systems, the data used to train them, issues of bias and fairness, potential intellectual property infringements, possible privacy violations, third-party risk, as well as security concerns.

Considering the growing concerns regarding AI’s potential misuse and unforeseen consequences, there’s a concerted effort to establish robust national standards. In a landmark move, the federal government unveiled the inaugural U.S. AI Safety Institute Consortium (AISIC) on February 8, 2024. AISIC is a pioneering coalition poised to shape the future of U.S. artificial intelligence safety and trustworthiness. Headed by U.S. Secretary of Commerce Gina Raimondo, the consortium is tasked with leading initiatives critical to AI’s ethical advancement, including the formulation of guidelines for red-teaming, capability assessments, risk management, safety and security, and watermarking synthetic content. AISIC will be housed at the U.S. National Institute of Standards and Technology (NIST) and will guide the coalition’s 200 members, which include state and local government representatives, non-profits, and international allies, all united in the pursuit of a safer AI landscape.

Additionally, on February 26, 2024, NIST released the first major update to its widely used Cybersecurity Framework (CSF) since the framework’s creation in 2014. It also released a Special Publication 800 resource for the healthcare industry, “Implementing the Health Insurance Portability and Accountability Act (HIPAA) Security Rule: A Cybersecurity Resource Guide.” NIST is proactively engaging with both public and private sectors through workshops and discussions, aiming to craft federal standards for AI systems that are reliable, robust, and trustworthy.

At the state level, legislators and medical regulators are rushing to keep pace with the challenges of regulating generative AI. More than 25 states introduced AI-related bills during the 2023 legislative session. These bills and resolutions range from Connecticut’s mandate for inventory and ongoing assessment of AI systems in state agencies to prevent unlawful discrimination, to Louisiana’s resolution for studying AI’s impact on operations, procurement, and policy. Moreover, initiatives like Maryland’s Industry 4.0 Technology Grant Program and the establishment of AI advisory councils in states like Texas underscore a comprehensive approach to integrating AI responsibly across various sectors.

In California, the legislative landscape is particularly active, with numerous bills pending that address a wide spectrum of AI applications, from automated decision systems in state agencies to the impacts of social media on mental health. These efforts reflect a nuanced understanding of AI’s implications, emphasizing responsible use, ethical considerations, and the safeguarding of individual rights amidst technological advancements (Table 3).

Table 3. Recent California Artificial Intelligence Legislation.

| Bill | Title | Summary | Status | Category |
| --- | --- | --- | --- | --- |
| A 302 | Department of Technology: Automated Decision Systems | Requires the Department of Technology, in coordination with other interagency bodies, to conduct, on or before specified date, a comprehensive inventory of all high-risk automated decision systems, as defined, that have been proposed for use, development, or procurement by, or are being used, developed, or procured by, state agencies. | Enacted | Government Use |
| A 331 | Automated Decision Tools | Relates to the State Fair Employment and Housing Act. Requires a deployer and a developer of an automated decision tool to perform an impact assessment for any such tool the deployer uses that includes a statement of the purpose of the tool and its intended benefits, uses, and deployment contexts. Requires a public attorney to, before commencing an action for injunctive relief, provide written notice to a deployer or developer of the alleged violations and provide a specified opportunity to cure violations. | Pending | Impact Assessment; Notification; Private Sector Use; Responsible Use |
| A 459 | Contracts Against Public Policy: Personal | Provides that a provision in an agreement between an individual and any other person for the performance of personal or professional services is contrary to public policy and deemed unconscionable if the provision meets specified conditions relating to the use of a digital replica of the voice or likeness of an individual in lieu of the work of the individual or to train a generative artificial intelligence system. | Pending | Private Sector Use |
| A 1282 | Mental Health: Impacts of Social Media | Requires the Mental Health Services Oversight and Accountability Commission to explore, among other things, the persons and populations that use social media and the negative mental health risks associated with social media and artificial intelligence. Requires the commission to report to specified policy committees of the Legislature a statewide strategy to understand, communicate, and mitigate mental health risks associated with the use of social media by children and youth. | Pending | Responsible Use |
| A 1502 | Health Care Coverage: Discrimination | Prohibits a health care service plan or health insurer from discriminating based on race, color, national origin, sex, age, or disability using clinical algorithms in its decision-making. | Pending | Health Use; Private Sector Use |
| ACR 96 | 23 Asilomar AI Principles | Expresses the support of the Legislature for the 23 Asilomar AI Principles as guiding values for the development of artificial intelligence and of related public policy. | Pending | Government Use; Private Sector Use; Responsible Use |
| AJR 6 | Artificial Intelligence | Urges the U.S. government to impose an immediate moratorium on the training of AI systems more powerful than GPT-4 for at least six months to allow time to develop much-needed AI governance systems. | Pending | |
| S 294 | Artificial Intelligence: Regulation | Expresses the intent of the Legislature to enact legislation related to artificial intelligence that would relate to, among other things, establishing standards and requirements for the safe development, secure deployment, and responsible scaling of frontier artificial intelligence models in the state market by, among other things, establishing a framework of disclosure requirements for AI models. | Pending | Effect on Labor, Employment; Responsible Use |
| S 313 | Department of Technology: Artificial Intelligence | Requires any state agency that utilizes generative artificial intelligence to directly communicate with a natural person to provide notice to that person that the interaction with the state agency is being communicated through artificial intelligence. Requires the state agency to provide instructions to inform the natural person how they can directly communicate with a natural person from the state agency. | Pending | Government Use; Oversight, Governance |
| S 398 | Department of Technology: Advanced Technology: Research | Provides for the Artificial Intelligence for California Research Act, which would require the Department of Technology, upon appropriation by the Legislature, to develop and implement a comprehensive research plan to study the feasibility of using advanced technology to improve state and local government services. Requires the research plan to include, among other things, an analysis of the potential benefits and risks of using artificial intelligence technology in government services. | Pending | Government Use |
| S 721 | California Interagency AI Working Group | Creates the California Interagency AI Working Group to deliver a report to the Legislature regarding artificial intelligence. Requires the working group members to be Californians with expertise in at least two of certain areas, including computer science, artificial intelligence, and data privacy. Requires the report to include a recommendation of a definition of artificial intelligence as it pertains to its use in technology for use in legislation. | Pending | Studies |
| SCR 17 | Artificial Intelligence | Affirms the state legislature’s commitment to President Biden’s vision for safe artificial intelligence and the principles outlined in the Blueprint for an AI Bill of Rights. Expresses the Legislature’s commitment to examining and implementing those principles in its legislation and policies related to the use and deployment of automated systems. | Adopted | Responsible Use |

Source: StateNet

Healthcare organizations can benefit from improving AI governance now to avoid future legal, reputational, and financial damages, positioning themselves as trusted providers. They should champion the “FAVES” principles supported by the Office of the National Coordinator for Health Information Technology (ONC), ensuring that AI applications in healthcare are Fair, Appropriate, Valid, Effective, and Safe, thus aligning with the federal administration’s vision for a future where technology enhances, rather than detracts from, the human element of care.

Leaders should consider consulting with regulatory affairs and ethics experts to keep their organizations ahead of the government curve. An ethics committee could oversee AI initiatives, ensuring that ethical considerations are always prioritized. There are also several new AI regulation resources online that organizations can utilize. Here are three useful sites:

- AI State Laws Tracker | Electronic Privacy Information Center (EPIC)

- Executive Order Tracker | Stanford University Human-Centered Artificial Intelligence

- Global AI Legislation Tracker | International Association of Privacy Professionals (IAPP)

Healthcare organizations must take the initiative in this evolving field, developing their AI governance now so they can navigate the changing administrative landscape, ensure compliance, and avoid future pitfalls. As leaders engage with policymakers and advocate for ethical AI use, they play a crucial role in advancing the future of healthcare.

In summary, it’s clear that the integration of AI in healthcare presents exciting yet complex challenges. The key message is the pivotal role of leadership in steering this transition. Leading in healthcare in the era of AI requires a delicate balance of embracing technology while prioritizing human-centric leadership. It’s about leveraging AI to enhance patient care and operational efficiency without losing sight of the human touch that is fundamental to healthcare. Leaders must be visionaries, adept at blending AI insights with human empathy, and fostering an environment where continuous learning and innovation are valued. Equally important is navigating the evolving regulatory landscape and addressing ethical considerations to ensure AI’s integration is equitable and enhances the human element in care. This blend of technical savvy, policy acumen, and enduring human qualities will define the future of healthcare leadership, and make it an exhilarating time to be at the helm of a healthcare system.